Have you heard these claims?

“Artificial General Intelligence (AGI) is imminent!”

or

“At current rate of progress, AGI is inevitable!”

In a recent preprint, my co-authors and I explain why such claims are false and misleading, and we present a formal proof to that effect.

(…) the field of AI is in the grip of a dominant paradigm that pushes a narrative that AI technology is so massively successful that, if we keep progressing at our current pace, then AGI will inevitably arrive in the near future.

van Rooij, Guest, Adolfi, de Haan, Kolokolova, & Rich (2023)

We present an imaginary scenario with a hypothetical AI engineer called Dr. Ingenia, and we formalise their task: trying to create human-like or human-level cognition in machines (a.k.a. AGI) by training on human data.

(…) we reveal why claims of the inevitability of AGI walk on the quicksand of computational intractability.

van Rooij, Guest, Adolfi, de Haan, Kolokolova, & Rich (2023)

In the imaginary scenario, Dr. Ingenia has ideal conditions, access to all possible machine-learning methods, perfect data, and the ability to sample without bias.

This should be easy, right?

Not really.

Even under these ideal conditions, and even with an extremely low bar for approximation, Dr. Ingenia’s engineering problem turns out to be computationally intractable (formally, NP-hard under randomised reductions).

This gives a lower bound on the real-world complexity of creating AGI by machine learning: if the problem is intractable under ideal conditions, then it is intractable under realistic, less favourable conditions, too.
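To get a feel for what intractability means in practice, here is a minimal sketch comparing a polynomial step count with an exponential one. It is an illustration only, not the construction used in the proof: the cubic and exponential cost functions, the function names, and the assumed speed of one billion steps per second are all hypothetical choices made for this example. Note also that NP-hardness does not by itself prove that exponential time is required; the sketch assumes a procedure whose cost grows exponentially with input size.

```python
# Illustration only: how a polynomial and an exponential step count diverge.
# The cost functions and the machine speed are assumptions for this sketch,
# not quantities taken from the paper.

SECONDS_PER_YEAR = 60 * 60 * 24 * 365


def steps_polynomial(n: int) -> int:
    """Hypothetical tractable procedure: n^3 steps for input size n."""
    return n ** 3


def steps_exponential(n: int) -> int:
    """Hypothetical intractable procedure: 2^n steps for input size n."""
    return 2 ** n


def years_needed(steps: int, steps_per_second: int = 10 ** 9) -> float:
    """Convert a step count to years at an assumed machine speed."""
    return steps / steps_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for n in (10, 50, 100, 200):
        poly = years_needed(steps_polynomial(n))
        expo = years_needed(steps_exponential(n))
        print(f"n={n:>3}  polynomial: {poly:.3e} years  exponential: {expo:.3e} years")
```

Under these assumptions, a faster machine only divides the exponential cost by a constant, so modest increases in input size quickly cancel out any hardware gains.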

(…) to support the inevitability claim one would have to put forth a set of arguments, logically and mathematically sound, to prove not only that such a tractable procedure can exist, but also that one has it. Theorem 2 (the Ingenia Theorem) shows that this is impossible.

van Rooij, Guest, Adolfi, de Haan, Kolokolova, & Rich (2023)

This means that any model produced in the short run is but a “decoy” (see banner image). It also means that AGI cannot be imminent or inevitable (see quote above).

For full details of the theorem, proof and its implications, see the full paper:

- van Rooij, I., Guest, O., Adolfi, F. G., de Haan, R., Kolokolova, A., & Rich, P. (2023). Reclaiming AI as a theoretical tool for cognitive science. https://doi.org/10.31234/osf.io/4cbuv

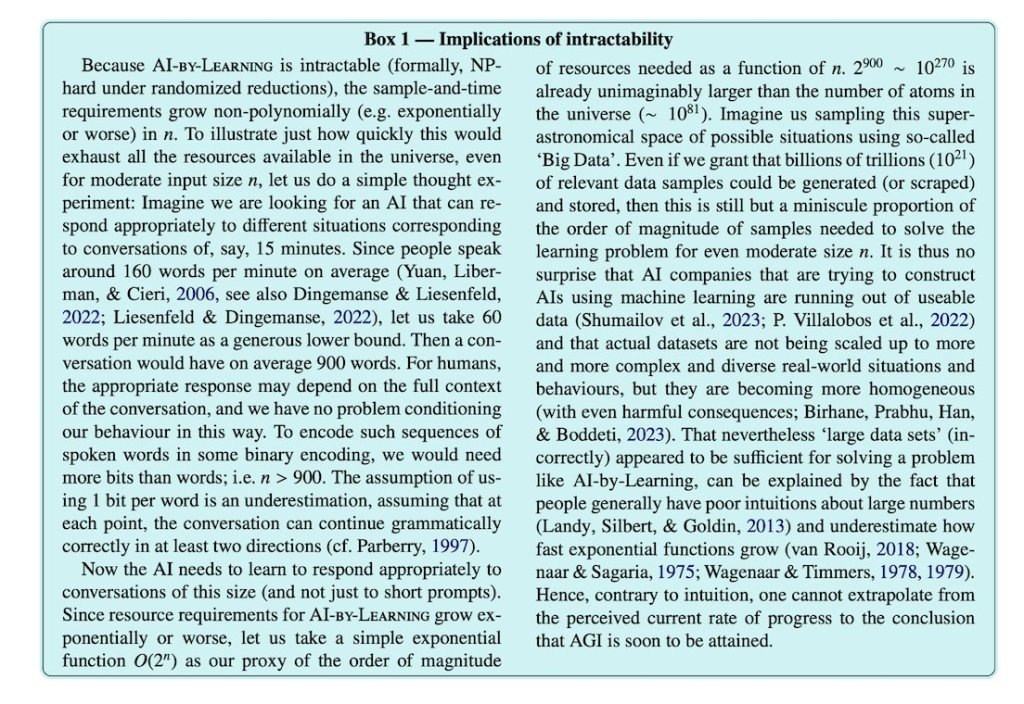

Box 1 in the paper also illustrates some of the implications.

Update (14-8-2024): The paper is now forthcoming in the journal Computational Brain & Behavior, one of the journals of the Society for Mathematical Psychology.

Further reading

- van Rooij (2020). Sampling cannot make hard work light. Blogpost.

- van Rooij, I., Blokpoel, M., Kwisthout, J., Wareham, T. (2019). Intractability and Cognition: A guide to classical and parameterized complexity analysis. Cambridge: Cambridge University Press.

- van Rooij (2018). Water lilies. Blogpost.

- A thread with an analysis of common objections to the proof.